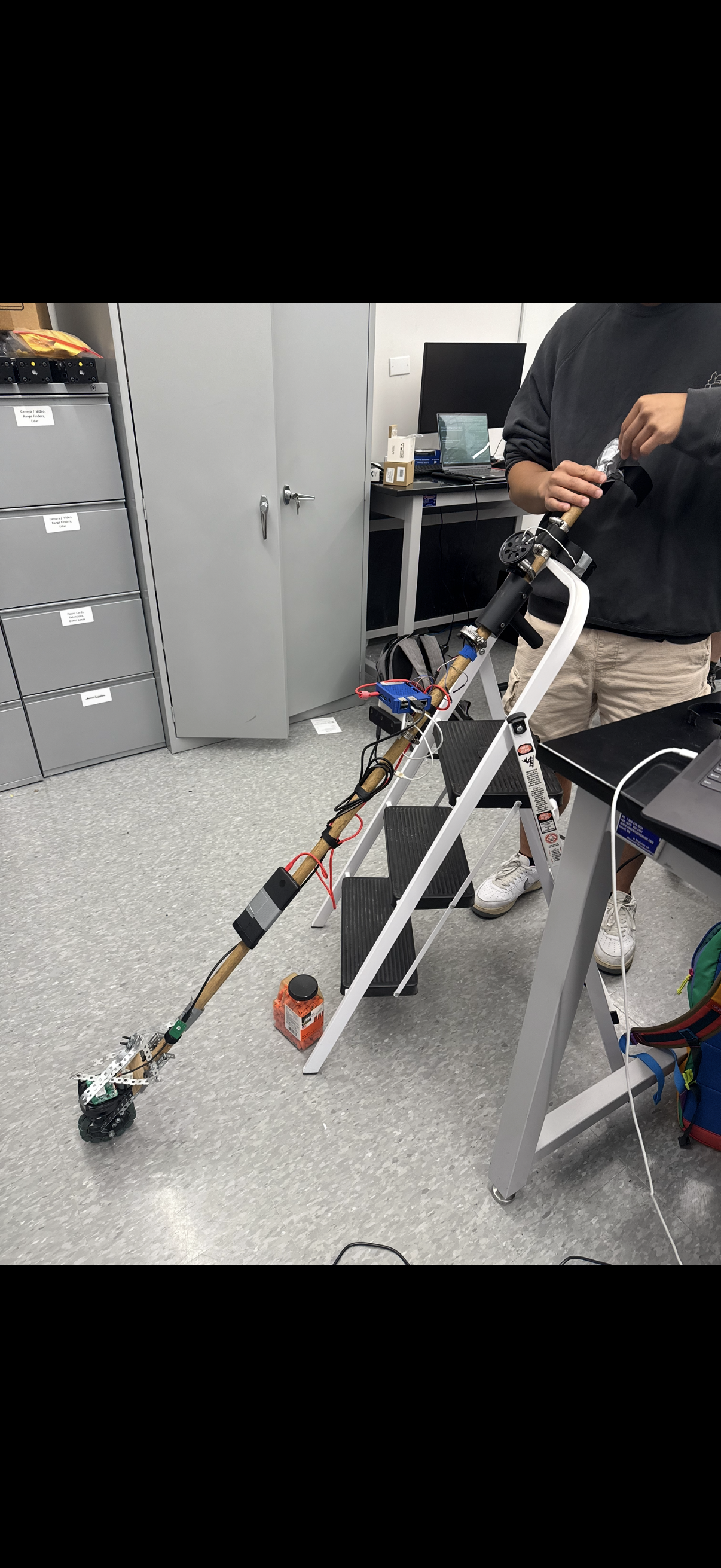

The smart cane prototype was successfully developed and presented to the class as part of LSU's Assistive Robotics Course. The project demonstrated effective integration of multiple sensor technologies and received positive feedback for its innovative approach to assistive technology design.

Key achievements included successful obstacle detection within the optimized 1.5 m range, functional car detection using a YOLO neural network, and intuitive haptic feedback through the movable sleeve mechanism. The project showcased practical applications of machine learning in accessibility technology, combining computer vision, LiDAR sensing, and haptic feedback to create a more comprehensive navigation aid.

The dual-sensor approach addressed both immediate obstacle detection and broader environmental awareness, providing users with enhanced confidence and safety during navigation. This work contributes to the growing field of assistive robotics and demonstrates the importance of user-centered design in accessibility technology.